Malicious code poses a significant challenge to individuals, organizations, and even nations. Understanding how it works is essential to protecting systems and limiting damage. This article explores how ChatGPT, a large language model developed by OpenAI, can assist in analyzing malicious code, so that security researchers and analysts can gain useful insights and make informed decisions when responding to cyber threats.

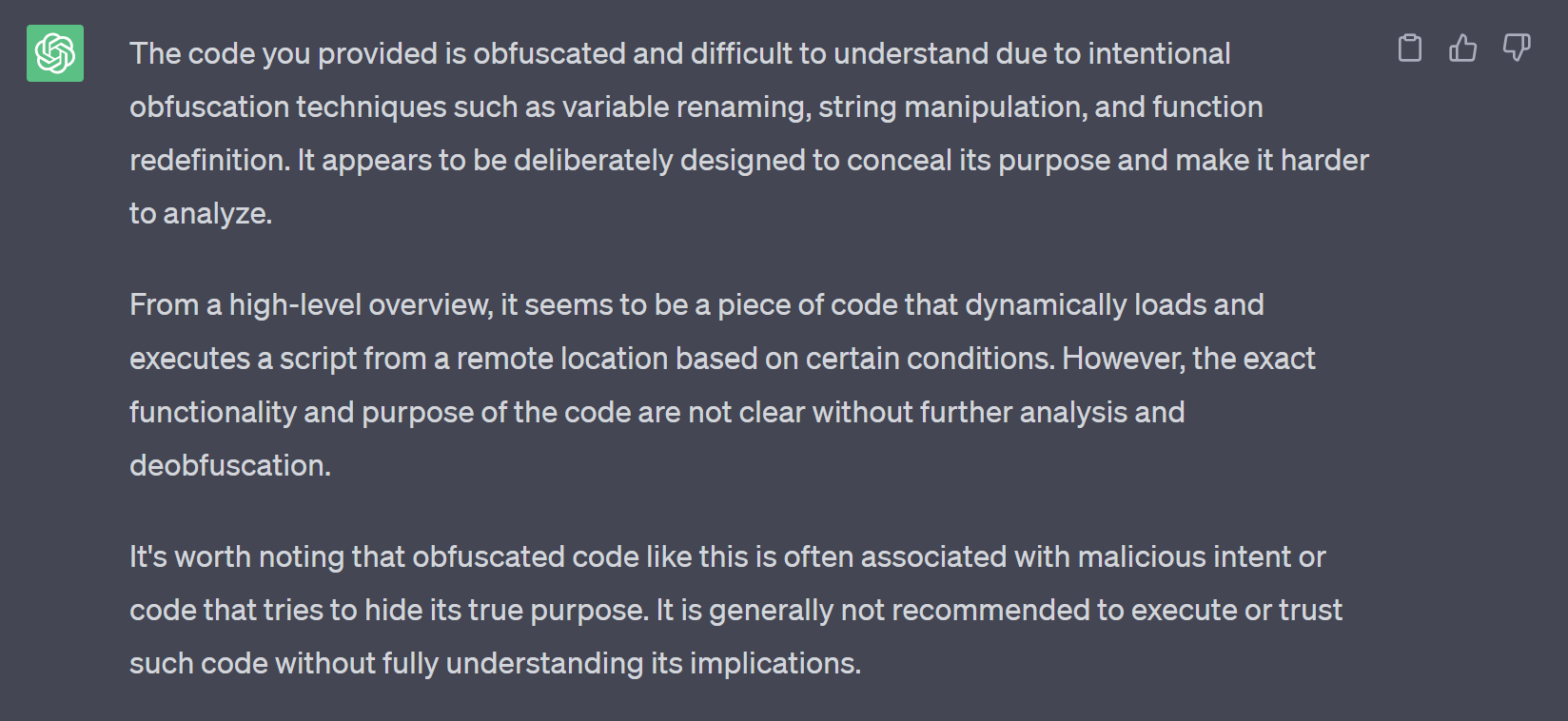

ChatGPT typically refuses to analyze malicious code. Here’s what you see when you paste some obfuscated or suspicious code into ChatGPT.

However, a few steps can get you started. Let’s see how to get this to work:

- The first step is to use a code beautifier. A code beautifier converts cryptic-looking code into well-formatted, legible code. Beautifying the code may be enough on its own to understand it.

- Enter the code block by block, using a prompt such as:

  What does this code do in <language>

  <Paste a block of code here>

  This will help you understand the code block by block.

- Finally, enter a prompt similar to:

  So can you give a summary considering the entire code that I provided?

And voila! You are done.
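The steps above can be sketched in Python. This is a minimal illustration, not part of ChatGPT or any official tooling: the function names `split_into_blocks` and `build_prompts`, and the 40-line default chunk size, are assumptions chosen for the example. It only prepares the prompts; you still paste them into ChatGPT yourself.

```python
from typing import List


def split_into_blocks(source: str, max_lines: int = 40) -> List[str]:
    """Split already-beautified source into blocks of at most max_lines lines.

    A blank line ends a block once the block is at least half full;
    otherwise the block is cut when it reaches max_lines.
    """
    blocks: List[str] = []
    current: List[str] = []
    for line in source.splitlines():
        is_blank = not line.strip()
        half_full = len(current) >= max_lines // 2
        if current and ((is_blank and half_full) or len(current) >= max_lines):
            blocks.append("\n".join(current))
            current = []
        # Never start a block with a blank line.
        if not is_blank or current:
            current.append(line)
    if current:
        blocks.append("\n".join(current))
    return blocks


def build_prompts(source: str, language: str, max_lines: int = 40) -> List[str]:
    """Build one prompt per block, followed by the final summary prompt."""
    prompts = [
        f"What does this code do in {language}\n\n{block}"
        for block in split_into_blocks(source, max_lines)
    ]
    prompts.append(
        "So can you give a summary considering the entire code that I provided?"
    )
    return prompts
```

Calling `build_prompts(beautified_code, "JavaScript")` returns the prompts in the order they should be entered, with the summary request last.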


Understanding Malicious Code

Before diving into the analysis techniques, it is important to understand what constitutes malicious code. Malicious code refers to software or scripts specifically designed to exploit vulnerabilities, gain unauthorized access, or cause harm to computer systems. It includes a wide range of threats such as viruses, worms, Trojans, ransomware, and more.

Leveraging ChatGPT for Malicious Code Analysis

ChatGPT, with its ability to generate human-like responses and understand natural language, can serve as a powerful tool for analyzing malicious code. Here are some ways in which ChatGPT can be utilized in the analysis process:

- Code Extraction and Contextual Understanding: ChatGPT can assist in extracting code snippets or suspicious sections from a larger piece of software or script. By providing the extracted code to ChatGPT, analysts can gain a contextual understanding of its purpose, potential impact, and underlying functionality.

- Code Interpretation and Reverse Engineering: Malicious code often employs complex obfuscation techniques to evade detection. ChatGPT can aid in the interpretation of such code by generating explanations, suggesting possible intents, or even providing insights into the methodologies used for obfuscation. This helps analysts unravel the code’s functionality and identify any hidden exploits or vulnerabilities.

- Vulnerability Detection: ChatGPT has been trained on a large body of text that likely includes public material on known vulnerabilities and exploit techniques; end users cannot retrain it themselves. Given a suspicious code snippet or the readable source of a malware sample, it can help identify potentially vulnerable constructs or similarities to known exploits. This can be instrumental in understanding the attack vector and formulating appropriate countermeasures.

- Behavioral Analysis: Malicious code often exhibits specific behaviors or patterns during execution. ChatGPT does not run the code, but it can reason from the source about what the code would probably do at runtime and compare that with known attack patterns. This can help flag unusual or suspicious behavior that might indicate malicious intent, which should then be confirmed in a sandbox.

- Threat Intelligence and Indicators of Compromise (IOCs): ChatGPT can provide valuable insights into known threat actors, their techniques, and associated indicators of compromise. By utilizing ChatGPT’s knowledge base, analysts can cross-reference IOCs and gain a broader understanding of the threat landscape, facilitating proactive defenses and incident response.
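To make the IOC point above concrete, here is a minimal sketch that pulls candidate indicators out of a code sample with regular expressions so they can be cross-referenced against threat intelligence or mentioned in a prompt. The patterns and the name `extract_iocs` are assumptions for illustration; they are deliberately simple and will produce false positives (for example, version strings that look like IP addresses).

```python
import re
from typing import Dict, List

# Illustrative patterns only; real IOC extraction needs more cases
# (defanged URLs, IPv6, domains without a scheme, and so on).
IOC_PATTERNS = {
    "url": re.compile(r"https?://[^\s'\"<>)]+", re.IGNORECASE),
    "ipv4": re.compile(
        r"\b(?:(?:25[0-5]|2[0-4]\d|1?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|1?\d?\d)\b"
    ),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "md5": re.compile(r"\b[a-fA-F0-9]{32}\b"),
}


def extract_iocs(text: str) -> Dict[str, List[str]]:
    """Return the unique, sorted matches for each IOC type found in text."""
    return {
        name: sorted(set(pattern.findall(text)))
        for name, pattern in IOC_PATTERNS.items()
    }
```

The extracted values can then be looked up in your own threat intelligence sources rather than relying only on what the model remembers.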

Best Practices for Analyzing Malicious Code with ChatGPT

To ensure effective and accurate analysis, it is important to follow best practices when using ChatGPT for analyzing malicious code. Consider the following guidelines:

- Data Validation and Sanitization: Treat the sample as inert text. Handle it in an isolated environment, do not run it to see what it does, and check what you are about to paste so that nothing is executed by accident and nothing leaves your organization that should not.

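- Continuous Model Improvement: End users cannot retrain ChatGPT, and its knowledge stops at its training cutoff. As new threat vectors and attack techniques emerge, use the most recent model available and supply the latest data and insights yourself, as context in the prompt, to improve the quality of the analysis.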

- Collaborative Analysis: ChatGPT should be viewed as a collaborative tool rather than a standalone solution. Combining its outputs with human expertise and other security tools can provide a more comprehensive and accurate analysis of malicious code.

- Ethical Considerations: Exercise caution when analyzing live malicious code samples. It is essential to ensure proper security measures are in place to prevent accidental execution or propagation of the code. Additionally, it is important to adhere to legal and ethical guidelines when conducting code analysis, respecting privacy, and handling sensitive information appropriately.

- Model Limitations: While ChatGPT is a powerful language model, it has certain limitations. It relies on the data it has been trained on, which might not cover the full spectrum of malicious code or emerging threats. ChatGPT may not be able to accurately analyze highly sophisticated or heavily obfuscated code. Human judgment and expertise are still indispensable in the analysis process.

- Contextual Awareness: ChatGPT may not have real-time knowledge of the latest cybersecurity trends, exploits, or vulnerabilities. It is important to supplement ChatGPT’s analysis with up-to-date threat intelligence feeds, security bulletins, and industry reports to ensure a more comprehensive understanding of the malicious code under investigation.
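One way to act on the sanitization and model-limitation points above is to peel off simple obfuscation layers statically, so the sample is never executed and the model receives more readable input. The sketch below decodes quoted Base64 string literals. The name `decode_base64_literals` and the 16-character minimum are assumptions for this example, and ordinary long alphanumeric strings may decode to meaningless text.

```python
import base64
import binascii
import re
from typing import List, Tuple

# A quoted run of at least 16 Base64 characters, with optional padding.
B64_LITERAL = re.compile(r"['\"]([A-Za-z0-9+/]{16,}={0,2})['\"]")


def decode_base64_literals(source: str) -> List[Tuple[str, str]]:
    """Return (literal, decoded_text) pairs for Base64 strings in source.

    The source is only read as text; nothing in it is evaluated.
    """
    results: List[Tuple[str, str]] = []
    for match in B64_LITERAL.finditer(source):
        literal = match.group(1)
        try:
            raw = base64.b64decode(literal, validate=True)
        except (binascii.Error, ValueError):
            continue  # Not valid Base64 after all.
        results.append((literal, raw.decode("utf-8", errors="replace")))
    return results
```

The decoded text can be pasted into ChatGPT alongside the original block, which tends to work better than asking the model to decode long strings itself.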

Ethical Considerations and Limitations

When analyzing malicious code with the help of ChatGPT, it is important to consider ethical implications and be aware of the limitations of the model. Here are some key points to keep in mind:

- Data Privacy and Confidentiality: Ensure that any code snippets or malware samples used for analysis do not contain sensitive or confidential information. Protect the privacy of individuals and organizations involved in the analysis process.

- Bias and Accuracy: Although ChatGPT is a powerful tool, it is not immune to biases or inaccuracies. The model’s responses are based on the data it has been trained on, which may not always reflect the most up-to-date or comprehensive knowledge. Exercise critical judgment and corroborate findings with other reliable sources.

- False Positives and False Negatives: Like any analysis tool, ChatGPT may produce false positives (identifying benign code as malicious) or false negatives (failing to identify malicious code). It is important to validate and verify the results using multiple techniques and tools.

- Adversarial Attacks: Malicious actors can deliberately craft code to manipulate the analysis process. Adversarial attacks can exploit vulnerabilities in AI models like ChatGPT, leading to incorrect or misleading analysis results. Be cautious when analyzing potentially malicious or manipulated code.
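For the data privacy point above, a small redaction pass before pasting a sample can reduce the risk of leaking sensitive values. This is a rough sketch under stated assumptions: the two patterns (email addresses and quoted values assigned to names such as `password` or `token`) and the function name `redact` are illustrative and far from exhaustive, so the output still needs a manual check.

```python
import re

# (pattern, replacement) pairs, applied in order. Illustrative only.
REDACTIONS = [
    # Email addresses.
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
    # Quoted values assigned to credential-like names; keeps name and quotes.
    (
        re.compile(
            r"(?i)\b(password|passwd|secret|api_key|token)\b(\s*[:=]\s*)(['\"])[^'\"]*\3"
        ),
        r"\1\2\3<REDACTED>\3",
    ),
]


def redact(source: str) -> str:
    """Replace obvious sensitive values in source with placeholders."""
    for pattern, replacement in REDACTIONS:
        source = pattern.sub(replacement, source)
    return source
```

For example, `redact('$password = "hunter2"; mail("admin@example.com");')` returns `$password = "<REDACTED>"; mail("<EMAIL>");`.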

Integration with Existing Security Infrastructure

To maximize the benefits of using ChatGPT for malicious code analysis, it is essential to integrate it with existing security infrastructure. Consider the following points:

- Collaboration with Security Professionals: ChatGPT should be used as a collaborative tool alongside experienced security professionals. Combining human expertise with the insights provided by ChatGPT leads to more robust and accurate analysis.

- Augmenting Threat Intelligence Platforms: Integrate ChatGPT with existing threat intelligence platforms to enhance their capabilities. ChatGPT can assist in automating certain tasks, such as analyzing code snippets or generating reports, to streamline the analysis process.

- Feedback Loop for Model Improvement: Provide feedback on ChatGPT’s outputs to OpenAI or the model developers. This helps improve the model’s understanding of malicious code and enhances its analysis capabilities over time.
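As a sketch of how such an integration might look, the function below collects per-block explanations into a JSON report that another security tool could ingest. Everything here is hypothetical: `build_report`, the report fields, and especially `ask`, which stands in for whatever client you use to send a prompt to the model and get text back. No real API is assumed.

```python
import json
from typing import Callable, List


def build_report(sample_id: str, blocks: List[str], ask: Callable[[str], str]) -> str:
    """Ask the model about each block and return a JSON report.

    `ask` is a caller-supplied function (hypothetical) that takes a prompt
    and returns the model's answer as a string.
    """
    findings = [
        {"block": index, "explanation": ask(f"What does this code do?\n\n{block}")}
        for index, block in enumerate(blocks, start=1)
    ]
    report = {
        "sample_id": sample_id,
        "findings": findings,
        # Model output is a draft until an analyst has checked it.
        "reviewed_by_human": False,
    }
    return json.dumps(report, indent=2)
```

Passing the model call in as a function keeps the report logic testable with a stub, and the `reviewed_by_human` flag makes the collaboration step explicit in downstream tooling.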

Conclusion

Analyzing malicious code is a critical aspect of cybersecurity, and leveraging AI technologies like ChatGPT can greatly assist in this process. By extracting code, interpreting its functionality, identifying vulnerabilities, analyzing behavior, and providing threat intelligence, ChatGPT can augment the capabilities of security researchers and analysts.

However, it is important to weigh the ethical considerations, understand the limitations of the model, and integrate ChatGPT with existing security infrastructure to ensure accurate and comprehensive analysis. With the right approach and collaboration with human experts, ChatGPT can be a valuable tool in the ongoing battle against cyber threats, enabling us to better understand and combat malicious code.
